简介(未完成)

AutoGen v0.2

AutoGen 代理是可定制的、可对话的,并且无缝地允许人类参与。in AutoGen

- 收发消息、生成回复:an agent is an entity that can send messages, receive messages and generate a reply using models, tools, human inputs or a mixture of them. This abstraction not only allows agents to model real-world and abstract entities, such as people and algorithms, but it also simplifies implementation of complex workflows as collaboration among agents.

- 可扩展、可组合:Further, AutoGen is extensible and composable: you can extend a simple agent with customizable components and create workflows that can combine these agents and power a more sophisticated agent, resulting in implementations that are modular and easy to maintain.

一个agent 具备干活儿所需的所有必要资源:An example of such agents is the built-in ConversableAgent which supports the following components:

- A list of LLMs

- A code executor

- A function and tool executor

- A component for keeping human-in-the-loop You can switch each component on or off and customize it to suit the need of your application. For advanced users, you can add additional components to the agent by using registered_reply.

核心概念

AutoGen先声明了一个Agent的协议(protocol),规定了作为一个Agent基本属性和行为:

- name属性:每个Agent必须有一个属性。

- description属性:每个Agent必须有个自我介绍,描述自己的能干啥和一些行为模式。

- send方法:发送消息给另一个Agent。

- receive方法:接收来自另一个代理的消息Agent。

- generate_reply方法:基于接收到的消息生成回复,也可以同步或异步执行。

class Agent:

def __init__(self, name: str,):

self._name = name

def send(self, message: Union[Dict, str], recipient: "Agent", request_reply: Optional[bool] = None):

"""(Abstract method) Send a message to another agent."""

def receive(self, message: Union[Dict, str], sender: "Agent", request_reply: Optional[bool] = None):

"""(Abstract method) Receive a message from another agent."""

def generate_reply(self,messages: Optional[List[Dict]] = None,sender: Optional["Agent"] = None,**kwargs,) -> Union[str, Dict, None]:

"""(Abstract method) Generate a reply based on the received messages."""

class ConversableAgent(Agent):

def __init__( self,name: str,system_message,...):

self._oai_messages = ... # Dict[Agent, List[Dict]] 存储了Agent 发来的消息(也包含自己的发出的消息?)

def register_reply(self,trigger,reply_func,...):

"""Register a reply function.The reply function will be called when the trigger matches the sender."""

def initiate_chat( self, recipient: "ConversableAgent", clear_history,...)

"""Initiate a chat with the recipient agent."""

def register_for_llm(self,*,name,description, api_style: Literal["function", "tool"] = "tool",) -> Callable[[F], F]:

"""Decorator factory for registering a function to be used by an agent."""

# 数据进入到 self.llm_config["functions"] 或 self.llm_config["tools"]

def register_for_execution( self, name: Optional[str] = None, ) -> Callable[[F], F]:

"""Decorator factory for registering a function to be executed by an agent."""

# 数据进入到 self._function_map

ConversableAgent核心是receive,当ConversableAgent收到一个消息之后,它会调用generate_reply去生成回复,然后调用send把消息回复给指定的接收方。同时ConversableAgent还负责实现对话消息的记录,一般都记录在ChatResult里,还可以生成摘要等。

一个开启llm,关闭code executor、第三方工具、不需要人输入的agent

import os

from autogen import ConversableAgent

agent = ConversableAgent(

"chatbot",

llm_config={"config_list": [{"model": "gpt-4", "api_key": os.environ.get("OPENAI_API_KEY")}]},

code_execution_config=False, # Turn off code execution, by default it is off.

function_map=None, # No registered functions, by default it is None.

human_input_mode="NEVER", # Never ask for human input.

)

reply = agent.generate_reply(messages=[{"content": "Tell me a joke.", "role": "user"}])

print(reply)

AutoGen在ConversableAgent的基础上实现了AssistantAgent,UserProxyAgent和GroupChatAgent三个比较常用的Agent类,这三个类在ConversableAgent的基础上添加了特定的system_message和description。其实就是添加了一些基本的prompt而已。

一个绑定了角色的Agent. assign different roles to two agents by setting their system_message.

cathy = ConversableAgent(

"cathy",

system_message="Your name is Cathy and you are a part of a duo of comedians.",

llm_config={"config_list": [{"model": "gpt-4", "temperature": 0.9, "api_key": os.environ.get("OPENAI_API_KEY")}]},

human_input_mode="NEVER", # Never ask for human input.

)

joe = ConversableAgent(

"joe",

system_message="Your name is Joe and you are a part of a duo of comedians.",

llm_config={"config_list": [{"model": "gpt-4", "temperature": 0.7, "api_key": os.environ.get("OPENAI_API_KEY")}]},

human_input_mode="NEVER", # Never ask for human input.

)

# 让两人说一段喜剧show

result = joe.initiate_chat(cathy, message="Cathy, tell me a joke.", max_turns=2)

决定Agent的行为模式就有几点关键:

- 提示词,system_message,就是这个Agent的基本提示词

- 能调用的工具,取决于给Agent提供了哪些工具,在AssistantAgent默认没有配置任何工具,但是提示它可以生成python代码,交给UserProxyAgent来执行获得返回,相当于变相的拥有了工具

- 回复逻辑,如果你希望在过程中掺入一点私货而不是完全交给LLM来决定,那么可以在generate_reply的方法中加入一点其他逻辑来控制Agent返回回复的逻辑,例如在生成回复前去查询知识库,参考知识库的内容来生成回复。

群聊

除了两个agent 互相对话之外,支持多个agent群聊(Group Chat),An important question in group chat is: What agent should be next to speak? To support different scenarios, we provide different ways to organize agents in a group chat: We support several strategies to select the next agent: round_robin, random, manual (human selection), and auto (Default, using an LLM to decide). Group Chat由GroupChatManager来进行的,GroupChatManager也是一个ConversableAgent的子类。它首选选择一个发言的Agent,然后发言Agent会返回它的响应,然后GroupChatManager会把收到的响应广播给聊天室内的其他Agent,然后再选择下一个发言者。直到对话终止的条件被满足。GroupChatManager选择下一个发言者的方法有:

- 自动选择: “auto”下一个发言者由LLM来自动选择;

- 手动选择:”manual”由用户输入来决定下一个发言者;

- 随机选择:”random”随机选择下一个发言者;

- 顺序发言:”round_robin” :根据Agent顺序轮流发言

- 自定义的函数:通过调用函数来决定下一个发言者;

如果聊天室里Agent比较多,还可以通过设置GroupChat类中设置allowed_or_disallowed_speaker_transitions参数来规定当特定的Agent发言时,下一个候选的Agent都有哪些。例如可以规定AgentB发言时,只有AgentC才可以响应。通过这些发言控制的方法,可以将Agent组成各种不同的拓扑。例如层级化、扁平化。甚至可以通过传入一个图形状来控制发言的顺序。

AutoGen允许在一个群聊中,调用另外一个Agent群聊来执行对话(嵌套对话Nested Chats)。这样做可以把一个群聊封装成单一的Agent,从而实现更加复杂的工作流。

AutoGen v0.4

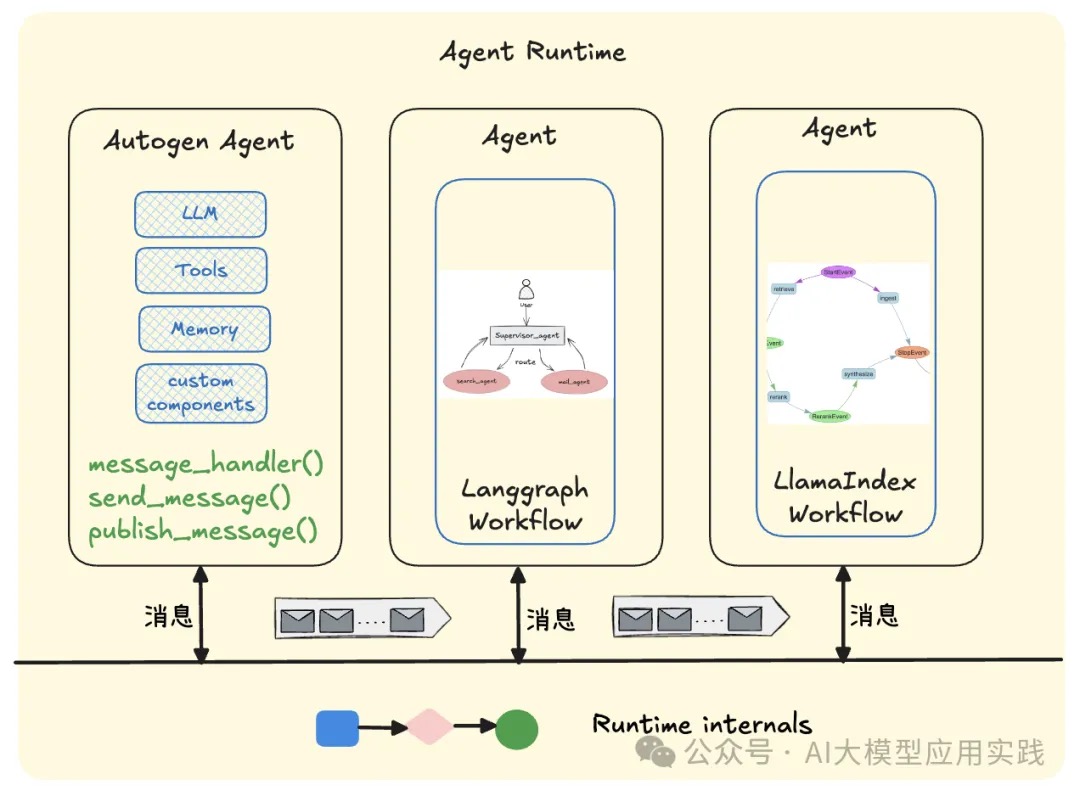

AutoGen0.4与旧版本最大的区别在于:提供了一种更底层的,快速构建消息驱动的、分布式、可扩展的多Agent系统的框架与组件,即AutoGen-core。AutoGen-Core提供了多Agent的基础管理与运行环境。

- Agents are developed by using the Actor model.

- the framework provides a communication infrastructure, and the agents are responsible for their own logic. We call the communication infrastructure an Agent Runtime.Agent runtime is a key concept of this framework. Besides delivering messages, it also manages agents’ lifecycle. So the creation of agents are handled by the runtime.

- An agent is a software entity that communicates via messages, maintains its own state, and performs actions in response to received messages or changes in its state. These actions may modify the agent’s state and produce external effects, such as updating message logs, sending new messages, executing code, or making API calls.

整体设计

- AutoGen Core

- autogen_core.base:定义了 Agent、Message 和 Runtime 时的核心接口和基础类。这个层次是框架的基础,其他层次会依赖于它。

- autogen_core.application:提供了 Runtime 的具体实现以及 Multi-Agent 应用程序所需的工具,如日志记录等。

- autogen_core.components:提供了构建 Agent 的可重用组件,包括 type-routed agent、model client、tools、代码运行沙箱和 memory。

- AgentChat 是一个用于构建多智能体应用的高级 API。它建立在 autogen-core 包之上。

- Extensions AutoGen 框架将官方实现和社区实现进行了一些分离,以插件的形式进行。官方维护框架的核心功能,而社区维护生态。

AutoGen-Core示例代码

定义两个Agent,ManagerAgent与WorkerAgent。ManagerAgent在收到任务消息(Hello World)后,会转发给Worker完成,并获得反馈。

from dataclasses import dataclass

@dataclass

class MyTextMessage:

content: str

from autogen_core import AgentId, MessageContext, RoutedAgent, message_handler

class MyWorkerAgent(RoutedAgent):

def __init__(self) -> None:

super().__init__("MyWorkerAgent")

@message_handler # 每个message_handler都会收到两个输入参数:消息体与消息上下文

async def handle_my_message(self, message: MyTextMessage, ctx: MessageContext) -> MyTextMessage:

print(f"{self.id.key} 收到来自 {ctx.sender} 的消息: {message.content}\n")

return MyTextMessage(content="OK, Got it!")

class MyManagerAgent(RoutedAgent):

def __init__(self) -> None:

super().__init__("MyManagerAgent")

# 由于其需要将任务消息转发给WorkerAgent,因此在初始化时,会保留指向WorkerAgent的引用

# 指定一个AgentId即可,Agent实例由Runtime在必要时自动创建

self.worker_agent_id = AgentId('my_worker_agent', 'worker')

@message_handler

async def handle_my_message(self, message: MyTextMessage, ctx: MessageContext) -> None:

print(f"{self.id.key} 收到消息: {message.content}\n")

print(f"{self.id.key} 发送消息给 {self.worker_agent_id}...\n")

response = await self.send_message(message, self.worker_agent_id)

print(f"{self.id.key} 收到来自 {self.worker_agent_id} 的消息: {response.content}\n")

from autogen_core import SingleThreadedAgentRuntime

import asyncio

async def main():

#创建runtime,并注册agent类型,并负责启动与停止

runtime = SingleThreadedAgentRuntime()

await MyManagerAgent.register(runtime, "my_manager_agent", lambda: MyManagerAgent()) # 注册定义好的Agent类型,并指定工厂函数用于实例化

await MyWorkerAgent.register(runtime, "my_worker_agent", lambda: MyWorkerAgent())

#启动runtime,发送消息,关闭runtime

runtime.start()

#创建agent_id,发送消息

agent_id = AgentId("my_manager_agent", "manager")

await runtime.send_message(MyTextMessage(content="Hello World!"),agent_id)

#关闭runtime

await runtime.stop_when_idle()

asyncio.run(main())

langgraph 可以看做是限定了node 输入和输出的dag Executor(step是一个func),llamaindex workflow则是事件驱动(step 限定输入输出是xxevent的func,step依托于workflow 而存在),event可以看做message通信的话,有一点消息总线的样子了。autogen 有点明确了提出了消息总线概念的样子,step是一个独立的类message_handler(意味着可以各方便的复用),只与runtime交互。